Databricks-Certified-Data-Engineer-Associate Exam Questions - Online Test

Databricks-Certified-Data-Engineer-Associate Premium VCE File

150 Lectures, 20 Hours

Proper study guides for Renew Databricks Databricks Certified Data Engineer Associate Exam certified begins with Databricks Databricks-Certified-Data-Engineer-Associate preparation products which designed to deliver the High quality Databricks-Certified-Data-Engineer-Associate questions by making you pass the Databricks-Certified-Data-Engineer-Associate test at your first time. Try the free Databricks-Certified-Data-Engineer-Associate demo right now.

Also have Databricks-Certified-Data-Engineer-Associate free dumps questions for you:

NEW QUESTION 1

An engineering manager wants to monitor the performance of a recent project using a Databricks SQL query. For the first week following the project’s release, the manager wants the query results to be updated every minute. However, the manager is concerned that the compute resources used for the query will be left running and cost the organization a lot of money beyond the first week of the project’s release.

Which of the following approaches can the engineering team use to ensure the query does not cost the organization any money beyond the first week of the project’s release?

- A. They can set a limit to the number of DBUs that are consumed by the SQL Endpoint.

- B. They can set the query’s refresh schedule to end after a certain number of refreshes.

- C. They cannot ensure the query does not cost the organization money beyond the first week of the project’s release.

- D. They can set a limit to the number of individuals that are able to manage the query’s refresh schedule.

- E. They can set the query’s refresh schedule to end on a certain date in the query scheduler.

Answer: E

Explanation:

If a dashboard is configured for automatic updates, it has a Scheduled button at the top, rather than a Schedule button. To stop automatically updating the dashboard and remove its subscriptions:

Click Scheduled.

In the Refresh every drop-down, select Never.

Click Save. The Scheduled button label changes to Schedule. Source:https://learn.microsoft.com/en-us/azure/databricks/sql/user/dashboards/

NEW QUESTION 2

Which of the following is stored in the Databricks customer's cloud account?

- A. Databricks web application

- B. Cluster management metadata

- C. Repos

- D. Data

- E. Notebooks

Answer: D

NEW QUESTION 3

Which of the following commands will return the location of database customer360?

- A. DESCRIBE LOCATION customer360;

- B. DROP DATABASE customer360;

- C. DESCRIBE DATABASE customer360;

- D. ALTER DATABASE customer360 SET DBPROPERTIES ('location' = '/user'};

- E. USE DATABASE customer360;

Answer: C

Explanation:

To retrieve the location of a database named "customer360" in a database management system like Hive or Databricks, you can use the DESCRIBE DATABASE command followed by the database name. This command will provide information about the database, including its location.

NEW QUESTION 4

A data engineer has configured a Structured Streaming job to read from a table, manipulate the data, and then perform a streaming write into a new table.

The code block used by the data engineer is below:

If the data engineer only wants the query to process all of the available data in as many batches as required, which of the following lines of code should the data engineer use to fill in the blank?

- A. processingTime(1)

- B. trigger(availableNow=True)

- C. trigger(parallelBatch=True)

- D. trigger(processingTime="once")

- E. trigger(continuous="once")

Answer: B

Explanation:

https://stackoverflow.com/questions/71061809/trigger-availablenow-for-delta- source-streaming-queries-in-pyspark-databricks

NEW QUESTION 5

A data analyst has created a Delta table sales that is used by the entire data analysis team. They want help from the data engineering team to implement a series of tests to ensure the data is clean. However, the data engineering team uses Python for its tests rather than SQL.

Which of the following commands could the data engineering team use to access sales in PySpark?

- A. SELECT * FROM sales

- B. There is no way to share data between PySpark and SQL.

- C. spark.sql("sales")

- D. spark.delta.table("sales")

- E. spark.table("sales")

Answer: E

Explanation:

https://spark.apache.org/docs/3.2.1/api/python/reference/api/pyspark.sql.SparkSession.tabl e.html

NEW QUESTION 6

Which of the following describes when to use the CREATE STREAMING LIVE TABLE (formerly CREATE INCREMENTAL LIVE TABLE) syntax over the CREATE LIVE TABLE syntax when creating Delta Live Tables (DLT) tables using SQL?

- A. CREATE STREAMING LIVE TABLE should be used when the subsequent step in the DLT pipeline is static.

- B. CREATE STREAMING LIVE TABLE should be used when data needs to be processed incrementally.

- C. CREATE STREAMING LIVE TABLE is redundant for DLT and it does not need to be used.

- D. CREATE STREAMING LIVE TABLE should be used when data needs to be processed through complicated aggregations.

- E. CREATE STREAMING LIVE TABLE should be used when the previous step in the DLT pipeline is static.

Answer: B

Explanation:

The CREATE STREAMING LIVE TABLE syntax is used when you want to create Delta Live Tables (DLT) tables that are designed for processing data incrementally. This is typically used when your data pipeline involves streaming or incremental data updates, and you want the table to stay up to date as new data arrives. It allows you to define tables that can handle data changes incrementally without the need for full table refreshes.

NEW QUESTION 7

A data engineer has realized that the data files associated with a Delta table are incredibly small. They want to compact the small files to form larger files to improve performance.

Which of the following keywords can be used to compact the small files?

- A. REDUCE

- B. OPTIMIZE

- C. COMPACTION

- D. REPARTITION

- E. VACUUM

Answer: B

Explanation:

OPTIMIZE can be used to club small files into 1 and improve performance.

NEW QUESTION 8

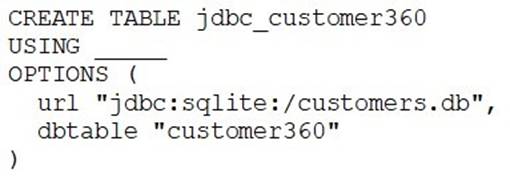

A data engineer needs to create a table in Databricks using data from their organization’s existing SQLite database.

They run the following command:

Which of the following lines of code fills in the above blank to successfully complete the task?

- A. org.apache.spark.sql.jdbc

- B. autoloader

- C. DELTA

- D. sqlite

- E. org.apache.spark.sql.sqlite

Answer: A

Explanation:

CREATE TABLE new_employees_table USING JDBC

OPTIONS (

url "<jdbc_url>",

dbtable "<table_name>", user '<username>', password '<password>'

) AS

SELECT * FROM employees_table_vw https://docs.databricks.com/external-data/jdbc.html#language-sql

NEW QUESTION 9

A new data engineering team team has been assigned to an ELT project. The new data engineering team will need full privileges on the table sales to fully manage the project.

Which of the following commands can be used to grant full permissions on the database to the new data engineering team?

- A. GRANT ALL PRIVILEGES ON TABLE sales TO team;

- B. GRANT SELECT CREATE MODIFY ON TABLE sales TO team;

- C. GRANT SELECT ON TABLE sales TO team;

- D. GRANT USAGE ON TABLE sales TO team;

- E. GRANT ALL PRIVILEGES ON TABLE team TO sales;

Answer: A

NEW QUESTION 10

Which of the following must be specified when creating a new Delta Live Tables pipeline?

- A. A key-value pair configuration

- B. The preferred DBU/hour cost

- C. A path to cloud storage location for the written data

- D. A location of a target database for the written data

- E. At least one notebook library to be executed

Answer: E

Explanation:

https://docs.databricks.com/en/delta-live-tables/tutorial-pipelines.html

NEW QUESTION 11

Which of the following describes the relationship between Gold tables and Silver tables?

- A. Gold tables are more likely to contain aggregations than Silver tables.

- B. Gold tables are more likely to contain valuable data than Silver tables.

- C. Gold tables are more likely to contain a less refined view of data than Silver tables.

- D. Gold tables are more likely to contain more data than Silver tables.

- E. Gold tables are more likely to contain truthful data than Silver tables.

Answer: A

Explanation:

In some data processing pipelines, especially those following a typical "Bronze-Silver-Gold" data lakehouse architecture, Silver tables are often considered a more refined version of the raw or Bronze data. Silver tables may include data cleansing, schema enforcement, and some initial transformations. Gold tables, on the other hand, typically represent a stage where data is further enriched, aggregated, and processed to provide valuable insights for analytical purposes. This could indeed involve more aggregations compared to Silver tables.

NEW QUESTION 12

Which of the following describes the relationship between Bronze tables and raw data?

- A. Bronze tables contain less data than raw data files.

- B. Bronze tables contain more truthful data than raw data.

- C. Bronze tables contain aggregates while raw data is unaggregated.

- D. Bronze tables contain a less refined view of data than raw data.

- E. Bronze tables contain raw data with a schema applied.

Answer: E

Explanation:

The Bronze layer is where we land all the data from external source systems. The table structures in this layer correspond to the source system table structures "as-is," along with any additional metadata columns that capture the load date/time, process ID, etc. The focus in this layer is quick Change Data Capture and the ability to provide an historical archive of source (cold storage), data lineage, auditability, reprocessing if needed without rereading the data from the source system.https://www.databricks.com/glossary/medallion- architecture#:~:text=Bronze%20layer%20%28raw%20data%29

NEW QUESTION 13

A data engineer has a Python notebook in Databricks, but they need to use SQL to accomplish a specific task within a cell. They still want all of the other cells to use Python without making any changes to those cells.

Which of the following describes how the data engineer can use SQL within a cell of their Python notebook?

- A. It is not possible to use SQL in a Python notebook

- B. They can attach the cell to a SQL endpoint rather than a Databricks cluster

- C. They can simply write SQL syntax in the cell

- D. They can add %sql to the first line of the cell

- E. They can change the default language of the notebook to SQL

Answer: D

NEW QUESTION 14

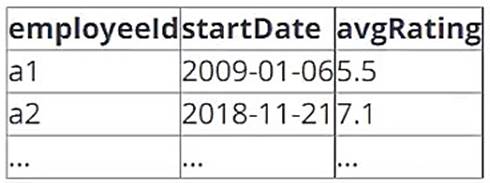

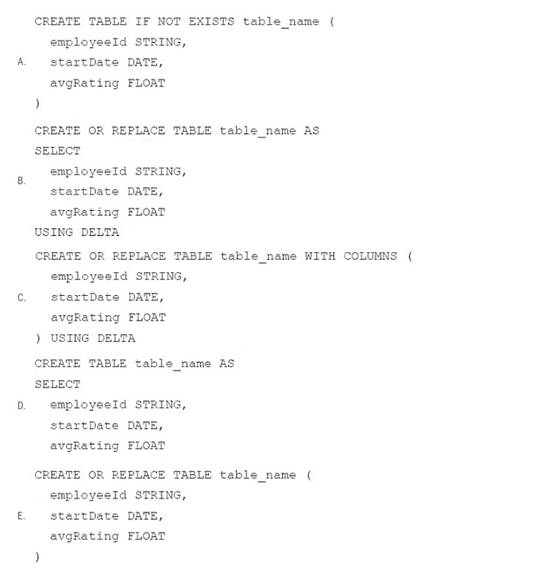

A data architect has determined that a table of the following format is necessary:

Which of the following code blocks uses SQL DDL commands to create an empty Delta table in the above format regardless of whether a table already exists with this name?

- A. Option A

- B. Option B

- C. Option C

- D. Option D

- E. Option E

Answer: E

NEW QUESTION 15

A data engineer has three tables in a Delta Live Tables (DLT) pipeline. They have configured the pipeline to drop invalid records at each table. They notice that some data is being dropped due to quality concerns at some point in the DLT pipeline. They would like to determine at which table in their pipeline the data is being dropped.

Which of the following approaches can the data engineer take to identify the table that is dropping the records?

- A. They can set up separate expectations for each table when developing their DLT pipeline.

- B. They cannot determine which table is dropping the records.

- C. They can set up DLT to notify them via email when records are dropped.

- D. They can navigate to the DLT pipeline page, click on each table, and view the data quality statistics.

- E. They can navigate to the DLT pipeline page, click on the “Error” button, and review the present errors.

Answer: D

Explanation:

To identify the table in a Delta Live Tables (DLT) pipeline where data is being dropped due to quality concerns, the data engineer can navigate to the DLT pipeline page, click on each table in the pipeline, and view the data quality statistics. These statistics often include information about records dropped, violations of expectations, and other data quality metrics. By examining the data quality statistics for each table in the pipeline, the data engineer can determine at which table the data is being dropped.

NEW QUESTION 16

......

P.S. Easily pass Databricks-Certified-Data-Engineer-Associate Exam with 87 Q&As Certleader Dumps & pdf Version, Welcome to Download the Newest Certleader Databricks-Certified-Data-Engineer-Associate Dumps: https://www.certleader.com/Databricks-Certified-Data-Engineer-Associate-dumps.html (87 New Questions)